#neworleans #savannah #charleston #columbia #raleigh #richmond

#digitalnomad #travel #usa #cultureshock #reversecultureshock #amtrak #publictransit #bus

Reverse culture shock is when you return to your own country after going abroad and have the same feelings of strangeness and bewilderment that you had when you arrived in other countries. My first experience with reverse culture shock was after a month-long trip to Japan in which I lived with a host family and went to language classes. When I arrived at the airport, I was aggravated that everyone stood right up against the baggage belt, even when their bags weren't there, rather than giving space to others. Then they wouldn't make space on the escalator for people to walk by. But those are super minor things. The next thing I noticed was that the smell of American food made me nauseous. I couldn't eat my favorite pizza because the greasy smell made me want to puke. I had a hard time eating anything for a month.

In January 2025, I came back to the US after traveling for a year and a half in Latin America, and boy, do I have some reverse culture shock now. I still do, five months later. One of the first things I noticed was that the conversations I overheard were much more rudimentary than what I was used to, to the point that I thought, “Wow, I'm really feeling that national average third-grade reading level.” But maybe that was just me being in a bad mood and a bit dick-ish. The main difference between conversations in the US and elsewhere was, as you could probably guess, how much of it was political commentary.

People all over will occasionally bring up politics. In Ecuador, I was told about various administrations, including one that started out with very populist/socialist rhetoric and then, once in office, became the most corrupt government they'd had, with none of the populism. I guess that never changes. In Argentina, things were fresh after Milei's inauguration and austerity policies. Most people I spoke to were upset and concerned, and protests against the privatization of universities were everywhere. But some were hopeful about the promise of stability after hardship. Did you know that in the past the Argentinian government tried to privatize universities and people occupied the universities with guns and shot at police to stop it? That's a story.

Back in the US, everything I heard seemed to parrot major media outlets. People seem to have strong opinions about things without actually understanding how they work. Perhaps that's universal, though I doubt you could get most Americans to define capitalism without using words like “freedom”, “hard work”, “dreams”, or “justice.”

There seems to be more anger here

In all my travels through more than 20 countries, I'd never had a bad experience with other guests in a hostel. When I came back to New Orleans, it was almost part of the routine that people would get into tiffs with each other, with staff, with whoever was around them. Courtesy in shared spaces seems to follow a different definition than the one I expected. Also, dudes in the US snore more often and more loudly. The men's dorm rooms also smell worse. My god, do they get musky quickly. That wasn't nearly as much of a thing in Latin America. Sure, some people snored, but Americans take it to a whole new level, to the point that I'd check whether my earplugs were working.

But there were other instances. At most hostels I stayed at in New Orleans, there was some kind of drama between guests, staff, and workers, in all directions, on a regular basis. There are a couple of hostels in New Orleans that I'm just not going back to. I've never done that before. But it's also the same with people there in general. Once, when I was riding my bike alongside someone on a road in New Orleans, the guy started yelling at me. I had pulled up next to him because the road wasn't safe to ride on, and I thought I'd made a biking buddy.

Thankfully, people in other places in the US aren't as high-strung as in New Orleans. I do want to say, though, that there are plenty of kind people, but there is also a lot of poverty and stress, more so in the US than in other places I've been, even super poor ones. There are a lot of reasons for that, I think, and poverty being almost illegal here doesn't help. At least other places allow shanty towns, so people can get SOME kind of shelter. I'd see people hanging nice-looking work clothes out to dry beside some piled-up bricks and a rudimentary roof. They were poor and doing what they had to do to get by with what they had. It's not ideal, but it's better than the option in the US, which is none.

But what about some of the good stuff?

There have certainly been good aspects of traveling back in the US, and I don't want to spend an entire blog post bitching. The food, music, and culture in New Orleans are still some of the best, and despite its problems it's still one of my favorite cities in the world. I've also been traveling up the East Coast on Amtrak, so I'll share some of that.

Savannah, GA

After Nola, I started my rail-road-tripping by flying to Savannah, GA. A lot of people praise the city for being beautiful and call it a nicer version of New Orleans. It certainly has a similar feel. I wasn't even tripping over the sidewalks!

Wraparound porches!?

I wish I had taken better pictures, but you can find professional ones on the internet anyway 😜. From there I went to my B&B, since there is no longer a hostel in Savannah. It looks like Covid got it.

Savannah's Central Park (Right behind here is the monument to slavers though)

rocking chairs at the bus stop!

Also, you get welcomed at the airport with rocking chairs while you wait for the bus into town. That's some southern hospitality right there! Not only that, but the bus takes you to an actual bus terminal with covered waiting areas, signs, wait times, etc. It actually treats you with respect, something unusual in American cities. I loved it, but I was in a rush to transfer and couldn't get a photo. I need to improve my photo-taking if I'm going to write a blog.

I also arrived during the Savannah Music Festival, where artists come from all over the country and even internationally to play here over several weeks. It was a very rich cultural experience.

They love the arts here! Look at that $2,500 donation!

So why is the largest monument in Savannah to slavers?

This thing is like 30' tall and protected by an iron fence while next to it is a monument to volunteers in the World Wars that's like 4' tall. What a way to show your priorities. There is a lot of rich history to be proud of. Why the insistence on memorializing the worst aspects of humanity, I'll never understand. Savannah isn't the only one, of course.

But that being said, there's also a really good African history museum that you should check out. It's small, but it has a lot of original and replica pieces you can view in a tour. Mine was given by a history graduate student and she did a fantastic job! I highly recommend a stop there.

photo of an African statue. It features a mother with long breasts supporting two children. The long breasts were an indication that she had raised surviving children and was a mark of honor.

You can really have a good time walking around not just the town center, but the old town as well. Unfortunately, they ripped up the tram lines and replaced some of them with buses that look like trams. Public transit was actually workable for a lot of the areas I stayed in, and I used it a good bit. Cycling is also a good option here, as most streets aren't too busy. I love the parks in the city and the overall planned layout.

Charleston, SC

From there I went to Charleston. There IS actually a hostel here! Woohoo! It also has a 7-day stay limit, though. Not super great if you're nomading and working.

Charleston is beautiful, but also super touristy. It seems like there aren't a lot of locals who live in the old town anymore; most residential-looking buildings that I saw seemed to be Airbnbs. Working there was not very easy. There are some cafes you can go to, but in the older cities they tend to be smaller and harder to work out of. What I ended up doing was working from the city and museum libraries. They had good internet, though at the university library I couldn't get the internet to work for me, likely due to my computer's corporate security, which does happen at times.

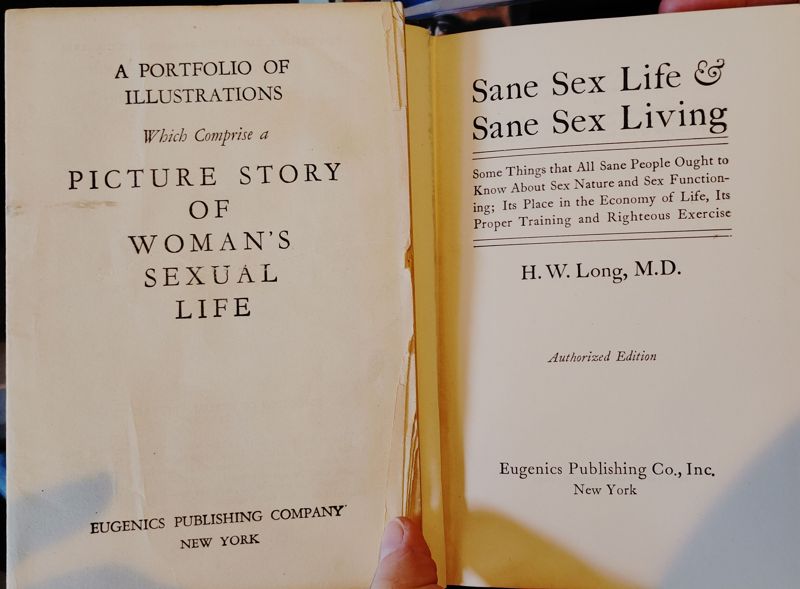

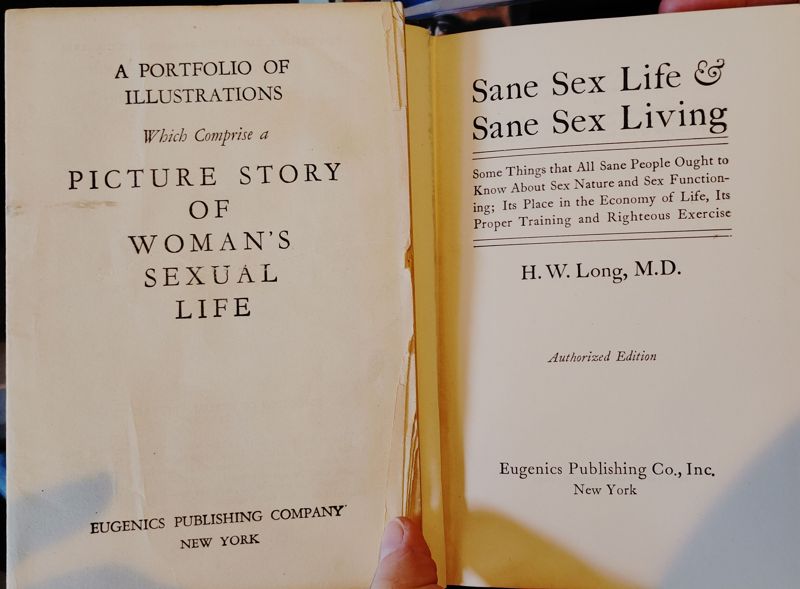

I stumbled upon an old bookstore that had quite a gem. Look at this one closely. It gets worse the more you look at it 😂.

Charleston has a museum alley/road, and the city has a ton of good museums, including the African American History museum and the city museum, both of which I highly recommend in addition to the others in the area. Charleston seems to take a different attitude toward history than Savannah, in that they show the history and don't celebrate the awful bits.

The city itself, though, is pretty much just a tourist hub. If you want to run into some locals, it seems better to go a bit away from the center. This isn't to say that King Street is bad or anything, but you will find a lot of bachelor/ette parties, spring breakers, etc. I was walking with someone from the hostel and heard a young'un shouting with his friends that they were going to some bar, and my friend and I looked at each other and said, “We're not going to some bar.” I'm old. I know.

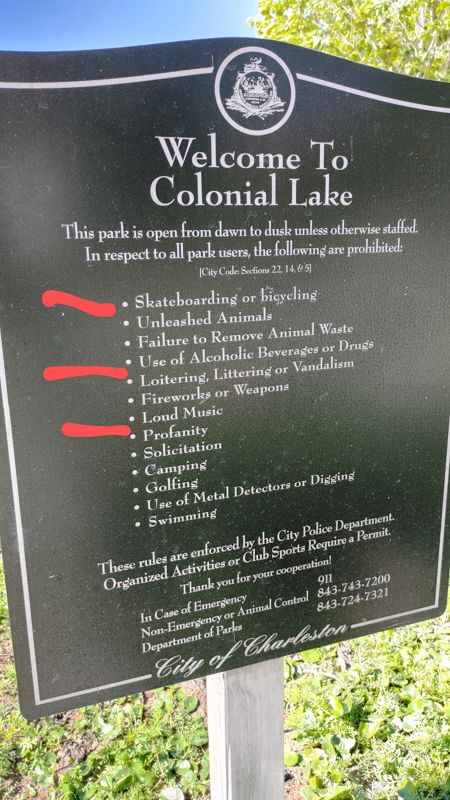

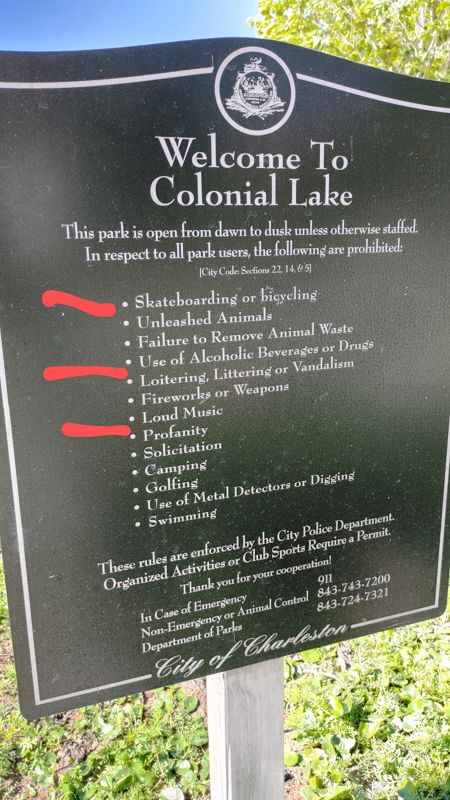

I did like the city, though. They have a really good farmers market where I picked up both food and a local spice blend that I'm still using. People were friendly, and the springtime weather was great. I did see something absolutely ridiculous, though, that makes me think the city is run by a bunch of old farts:

A sign at a park banning loitering!? There are benches here! And no profanity, skateboarding, or loud music. Might as well say no teenagers, too!

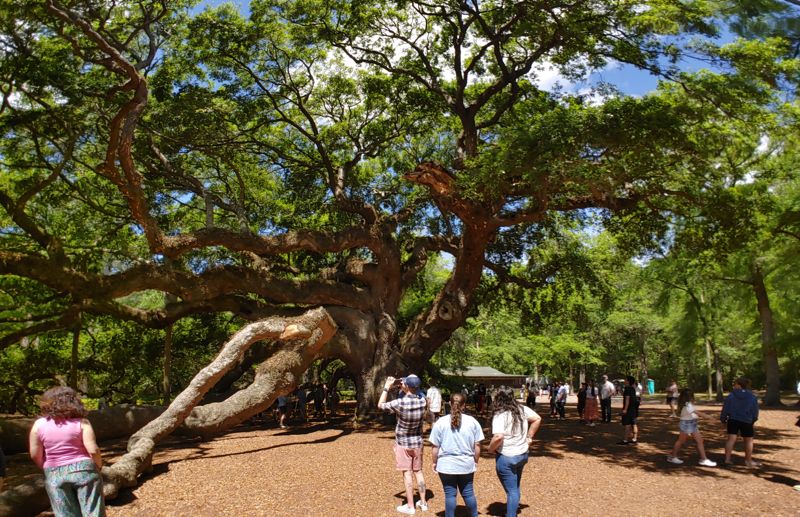

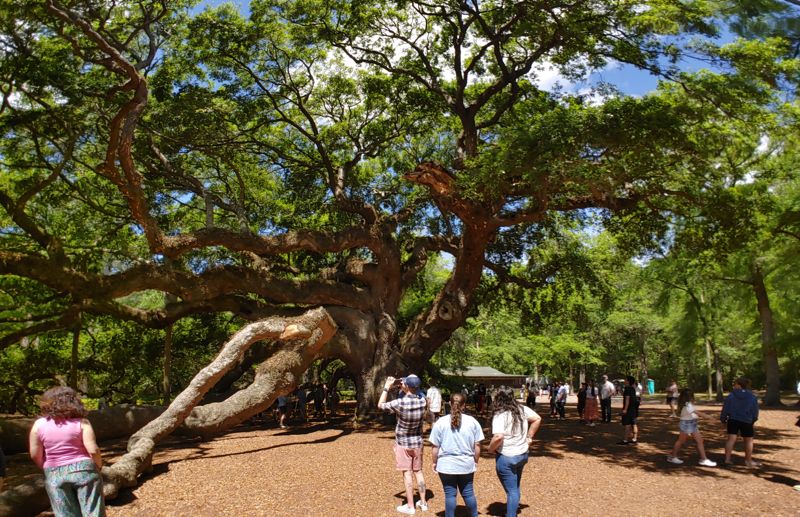

Outside of Charleston is an old oak tree called the Tree of Life. You can only get there by car. It's absolutely worth a visit! There's an old gentleman there who paints the tree almost daily. I was talking with a local about the tree, and he brought up the gentleman and said that he's gotten pretty good! You can find his paintings at the old French market in town. If you see someone painting the tree at an easel, have a chat with him!

The roots of this tree are as large as the branches. That is wild. They are taking care to try and preserve it, as it's fragile in its old age. Some of the large branches have fallen off or been cut and sealed with some kind of sealant.

Columbia, SC

One way to get a feel for a city is the sense of dignity and respect you receive as you arrive. Here's what I mean: I came from Charleston by bus, because taking the train would have meant going back to Savannah and transferring, and I arrived not in town near the Amtrak station, but at a warehouse far outside of town. I had to order an Uber to pick me up. The driver told me that they had moved the bus stop from near the Amtrak station by the town center to outside of town because the city didn't like the “undesirables” hanging out there.

That lack of dignity was a good indicator for my stay here. I don't really like Columbia. The majority of the city is parking lots, and while locals say that it's walkable, it's not super pleasant. They do have parks that are pretty decent, but public transit and bike lanes aren't really a thing. The lack of a hostel meant that I had to stay at a hotel. I had booked 3 nights, but once I arrived, I wished it had been 1 or 2. It was fine, though.

Here's what I mean about the look of the city as you're walking around:

This part of the road is brick, which makes it feel slightly less car oriented, but it's still cars first.

This is one of the more pleasant parts. Notice that this parking lot is an entire city block. Right behind it on the other side of the road is another block sized parking lot. It's spring break at this time, so notice the total absence of automobiles. The actual downtown isn't as pretty, but just as much parking. There's even parking right in the middle of the avenues! It's so dangerous! And ugly. It's so ugly!

The city is just not that pleasant to walk around, despite the fact that there is decent tree coverage, which definitely helps with the heat and sun.

The art museum was closed for renovation, but the park near the government buildings was open, with the same kind of massive slaver statue in the center.

When I finally left, I walked 20 minutes at 6 AM to the train station. I had tracked the train on my phone, and it was running 1.5 hours late for its 4:30 AM departure. When I arrived, the others, who had been waiting since around 4 AM, were audibly jealous. Amtrak doesn't really start getting decent until you get to DC.

While I didn't like Columbia overall, I did see a bit of humanity there that reminded me that kindness still exists in people.

Bitty and Beau's

Bitty and Beau's is a coffee shop chain in the region that employs people with Down syndrome to run the place. They are treated no differently than anyone else. They actually run the cafe: they stock, take and fill orders, everything. They're not left pushing carts because nobody knows what to do with them or expects anything of them. They are treated with dignity there. I love it.

What I gathered is that the founders had two children with Down syndrome and opened a coffee shop so that they would be able to work. They say that 80% of people with these disabilities are unemployed, even though they are capable of working. They've opened multiple stores around the country.

The yellow pins are the stores and the red are where people are from. The university is here and they get students from all over the world!

They even wrote nice messages for you. 😊

I'm really glad that I found this place.

Raleigh, NC

I really liked Raleigh overall. When I arrived at the city via the Amtrak, I was greeted with a station that looked like it was an old factory. It was really cool – and right on the edge of downtown! It also looks like they're doing some kind of bus expansion right next to the station, so I wonder if that will be for inter-city buses or something. I can't believe I didn't get a picture of the station, it's really nice!

But I did go to a nearby coffee shop and got a photo of the barista's Frieren tattoo!

Unfortunately Raleigh also no longer has a hostel. The only place that I could find that wasn't insanely expensive was the Red Roof Inn outside of town to the south in a bit of a no man's land. But! There was an express bus that stopped a few blocks down, so while not pleasant, it was frequent enough to use. Coming back, however, was not pleasant. How do you cross this road!? There are no cars in the photo now, but I had to wait a while to get this photo. This is the major road going out of the city in that direction, so it's always packed with high speed vehicles.

The express bus brings you through town to a really nice bus station. It's where all of the city buses link up right in the center of town. They have TVs with arrival times and announcements and it's totally covered, so you're out of the heat and elements. It's nice to be treated with dignity.

As far as working goes in the city, it was pretty doable. There were quite a few good cafes to work at and I saw a lot of university students doing work as well. The downtown area was very walkable, so I was able to change locations if wifi wasn't working out or to go get lunch.

They also had a pretty big festival there! There were stalls all along this road, and there were concerts at the stadiums on the outskirts of downtown. For this festival, you pay for a day pass and get unlimited small glasses of beer.

It wasn't at the festival, but I tried an oyster beer! It was definitely interesting. It had hints of oyster, but they weren't overwhelming. It's something I'd try once, haha.

Earlier in the day I was talking with the owner of this bar, which is Slim's Dive Bar. They were very nice and have good music shows there on the regular!

Richmond, VA

I was originally going to pass on Richmond until an Uber driver really talked it up for its architecture. He really emphasized that I shouldn't miss it. I was skeptical: what was I going to get? Slaver shit?

I was actually wrong. Richmond felt oddly progressive compared to some other southern towns 😒 (Columbia). There's no hostel, of course, but I was able to find a hotel for a few days at a decent price outside of town, right by the bus line, and to my surprise, it's actually BRT (bus rapid transit)! Like, with its own lanes in the middle of the road, platform-level boarding, and everything! And totally free! Waaaaaaaaaaaaaaaaaaa!

The back glass walls are really cool, with maps of the route and neighborhood. The QR codes are supposed to be information on the area, but they didn't work for me.

Richmond has really good art and science museums. The science museum had an arrow pointing to trains, so you can guess where I went.

The art museum was massive. I kept getting lost in it. Also, the art museum was free except for the Frida Kahlo exhibit (I don't remember if the science museum was).

The grounds around the museum are very welcoming and lots of people were out enjoying the sun, picnics, playing, and relaxing.

Walking around is entertaining as well!

I love the hands!

In addition, I got to see a music show in the old theater. I noticed that the dancing people in the paintings are different in each section. I love the architecture! The music wound up being country, of course. I didn't know what I was getting into, but I didn't have plans and saw that a show was happening, so I bought a ticket.

There are some cool buildings in downtown too.

Overall, the downtown had some cool buildings, though unfortunately there were areas with a lot of closed businesses. Some were still open, but I saw two whole blocks with everything shuttered. There was, of course, the Confederate White House, a pathetic-looking building surrounded by a hospital. It's hard to get to, as it should be. And it was just some dude's house, so calling it the White House is trumping it up a bit. The Daughters of the Confederacy had their building right next to the art museum. Theirs looked like a mausoleum, which is where their ideology belongs anyway, so maybe that's fitting. I thought I had gotten a picture of it, but I probably decided not to give them the dignity.

I actually enjoyed my visit to Richmond. It felt like it had some genuinely progressive aspects to it. I had some good food, met friendly people, it was easy to get into town with the BRT, and the free transit and museums were super nice! There are other parks and areas to visit that I didn't cover.

I'm glad I visited!

There are more places that I visited as well, but this part is already too long. By the time I was getting to this area, the fascism was ramping up to another level, so the next posts will show some protests!

Comment on Mastodon!

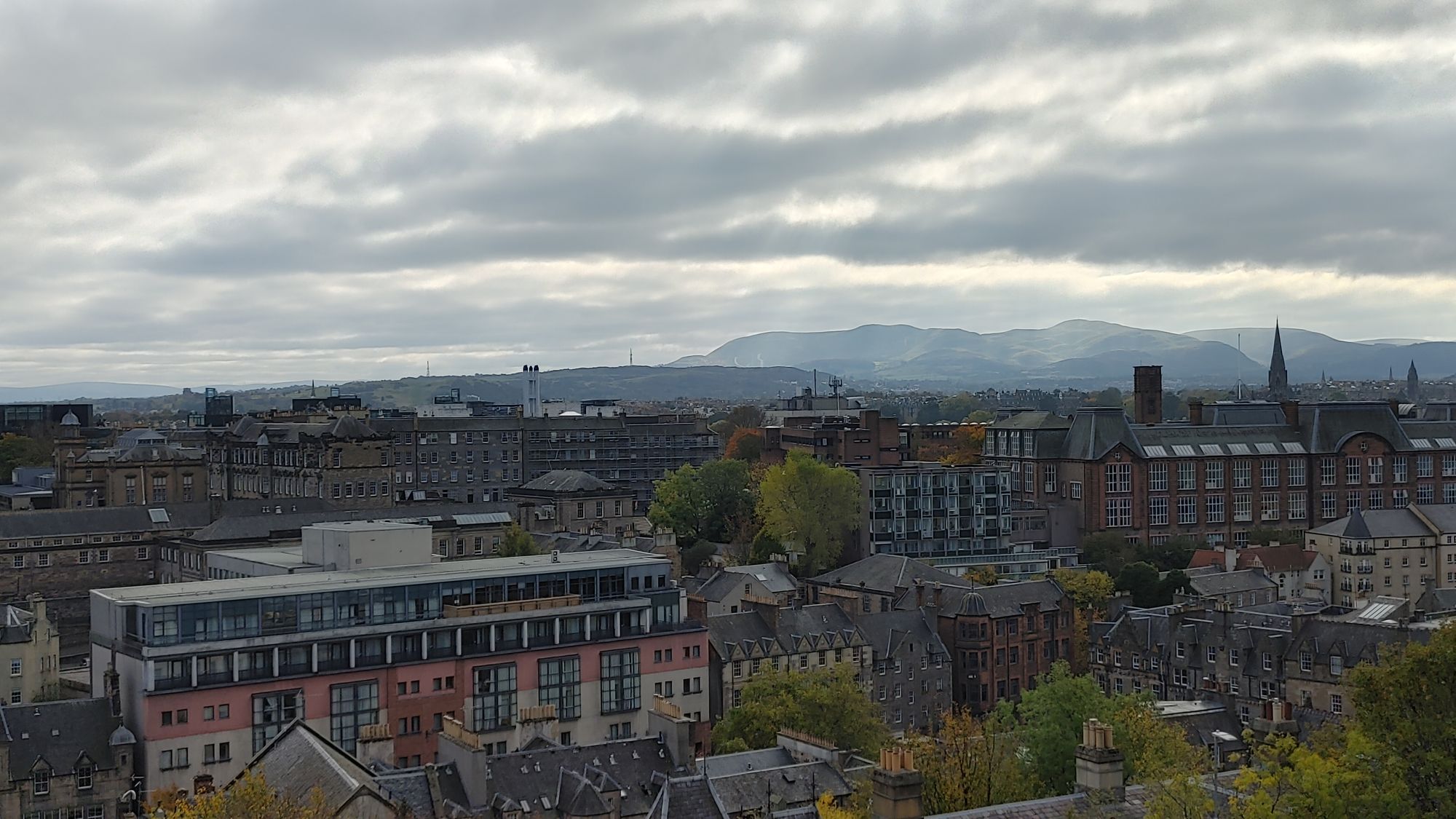
